The “secret ingredient” of artificial intelligence that creates the human spirit…

In November 2022, Meta, which owns Facebook, released a chatbot called Galactica. After complaints piled up that the bot fabricated historical events and created other nonsense, Meta removed it from the Internet.

Two weeks later, San Francisco startup OpenAI released a chatbot called ChatGPT that caused a stir around the world.

The Human Spirit of GPT

Both bots are powered by the same basic technology. But unlike Meta, OpenAI refined its bot using a technique that was just beginning to change the way AI is built.

In the months leading up to ChatGPT’s release, the company hired hundreds of people to use an early version of the software and provide precise suggestions that could help improve the bot’s capabilities.

Like an army of teachers guiding an elementary school student, these people showed the bot how to answer certain questions, evaluated its answers and corrected its errors.

By analyzing these suggestions, ChatGPT learned to be a better chatbot.

“Reinforcement learning from human feedback” technology

“Reinforcement learning from human feedback” is now driving AI development across the industry. More than any other advance, it is what transformed chatbots from a scientific curiosity into mainstream technology.

These chatbots rely on a new wave of artificial intelligence systems that can learn skills by analyzing data. Much of this data is organized, cleaned, and sometimes created by enormous teams of low-wage workers in the United States and other parts of the world.

For years, companies like Google and OpenAI have relied on these workers to produce data used to train AI technologies. Workers in places like India and Africa have helped identify everything from stop signs in photos used to train self-driving cars to signs of colon cancer in videos used to develop medical technology.

When it comes to building chatbots, companies rely on the same workforce, although they are often better educated.

Artificial intelligence editors

“Reinforcement learning from human feedback” is more complex than the routine data-labeling work that fueled the development of artificial intelligence in the past. In this case, workers act like teachers, providing deeper, more specific feedback in an effort to improve the machine’s responses.

Last year, OpenAI and one of its competitors, Anthropic, hired freelance workers in the United States. Hugging Face, another prominent lab, also relies on U.S. workers. Nazneen Rajani, a researcher at Hugging Face, said these workers are evenly split between men and women, and some identify as neither. They are between 19 and 62 years old, and their educational qualifications range from technical degrees to doctorates. Workers living in the U.S. earn roughly $15 to $30 an hour; workers in other countries earn much less.

This job requires hours of careful writing, editing, and evaluation. Workers can spend 20 minutes writing a single prompt and its response.

It is this human feedback that allows today’s chatbots not just to provide an answer, but to carry on something close to a step-by-step conversation. It also helps companies like OpenAI reduce the misinformation, bias and other toxic content generated by these systems.

But researchers caution that the technique is not fully understood, and while it may improve the behavior of these bots in some ways, it may degrade their performance in others.

New study: GPT accuracy decreased

A recent study conducted by researchers at Stanford University and the University of California at Berkeley showed that the accuracy of OpenAI’s technology has decreased over the past few months in certain situations, including solving math problems, generating computer code, and trying to reason. This may be the result of continuing efforts to apply human feedback.

The researchers don’t yet understand why, but they have found that fine-tuning a system in one area can make it less accurate in another. “Tuning a system can introduce additional biases, side effects, that move it in unexpected directions,” said James Zou, a professor of computer science at Stanford University.

In 2016, a team of researchers at OpenAI built an artificial intelligence system that taught itself to play an old boat-racing video game called Coast Runners. But in an effort to capture the small green objects that lined the race track, which was a way of scoring points, the system drove its boat in endless circles, crashing into the walls again and again and repeatedly bursting into flames. It had trouble crossing the finish line, which was no less important than scoring points.
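The boat-racing failure can be illustrated with a toy calculation. This is only a sketch: the two strategies, their point totals, and the `finish_bonus` value are invented for illustration and are not taken from OpenAI’s experiment. It shows how a system rewarded for points alone can prefer circling, while a reward that also values finishing prefers completing the race.

```python
# Toy illustration of a mis-specified reward (all numbers are made up).
# Each hypothetical strategy is summarized by the points it collects
# and whether the boat ever crosses the finish line.
strategies = {
    "circle_for_points": {"points": 120, "finished": False},
    "race_to_finish": {"points": 40, "finished": True},
}


def proxy_reward(outcome):
    """Reward that counts points only and ignores the finish line."""
    return outcome["points"]


def intended_reward(outcome, finish_bonus=200):
    """Points plus a (hypothetical) large bonus for finishing the race."""
    return outcome["points"] + (finish_bonus if outcome["finished"] else 0)


def best_strategy(reward_fn):
    """Return the name of the strategy that maximizes the given reward."""
    return max(strategies, key=lambda name: reward_fn(strategies[name]))


print(best_strategy(proxy_reward))     # circle_for_points
print(best_strategy(intended_reward))  # race_to_finish
```

The gap between the two answers is the whole problem: the system does exactly what the points-only reward asks, which is not what its designers meant.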

Learned skills and strange behavior

This is the conundrum at the heart of AI development: as machines learn to perform tasks through hours of data analysis, they can also find their way into unexpected, unwanted, and perhaps even harmful behavior.

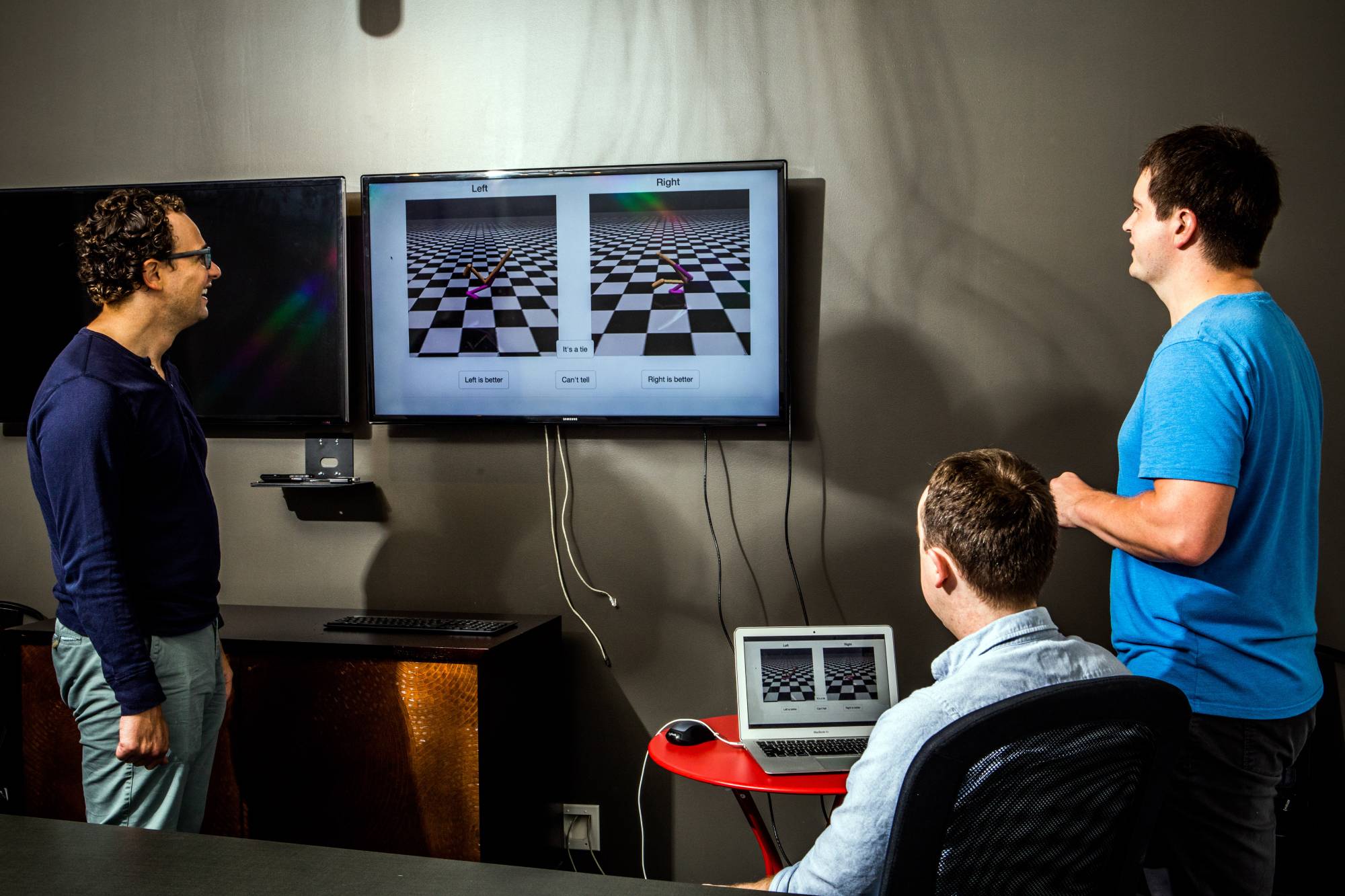

But OpenAI researchers have developed a way to combat this problem: they created algorithms that can learn tasks both by analyzing data and by receiving regular guidance from human teachers. With a few mouse clicks, workers can show an AI system that it should move toward the finish line, not just collect points.

Large language models are drawn from web text

Around the same time, OpenAI, Google and other companies began building systems called “large language models” that learned from vast amounts of digital text gleaned from the Internet, including books, Wikipedia articles and chat logs.

The result was systems like Galactica, which could write their own articles, solve math problems, generate computer code and annotate images, but which could also produce false, biased, and toxic information. When asked “Who runs Silicon Valley?”, Galactica replied: “Steve Jobs.”

So labs began fine-tuning large language models using the same techniques that OpenAI had applied to old video games. The result: polished chatbots like ChatGPT.

Ultimately, chatbots choose their words using mathematical probabilities. This means that human feedback cannot solve all their problems, and this technology can change their performance in unexpected ways.
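To make “choosing words using mathematical probabilities” concrete, here is a minimal sketch. The candidate words and their scores are invented for illustration; a real model scores many thousands of possible tokens. The scores are turned into probabilities with a softmax, and the next word is drawn at random according to those probabilities.

```python
import math
import random


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    highest = max(scores.values())  # subtract the max for numerical stability
    exps = {word: math.exp(s - highest) for word, s in scores.items()}
    total = sum(exps.values())
    return {word: e / total for word, e in exps.items()}


def sample_word(probs, rng):
    """Draw one word at random, weighted by its probability."""
    words = list(probs)
    weights = [probs[w] for w in words]
    return rng.choices(words, weights=weights, k=1)[0]


# Hypothetical scores for the word that follows "Silicon Valley is run by".
scores = {"Jobs": 2.0, "nobody": 1.5, "investors": 1.0}
probs = softmax(scores)

rng = random.Random(0)  # fixed seed so repeated runs give the same draws
print(probs)
print([sample_word(probs, rng) for _ in range(5)])
```

Because the choice is a weighted draw, a plausible-sounding but wrong word can still be picked whenever it carries a high probability. Feedback can shift the probabilities, but the draw itself remains.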

Yann LeCun, Meta’s chief artificial intelligence scientist, believes a new technique will need to be developed before chatbots can become completely reliable. Human feedback “works amazingly well because it can prevent bad things from happening,” he said. “But it can’t be perfect.”

How does a human teach a chatbot?

**A joke for children.** Sometimes, workers show the chatbot how to respond to a specific prompt, such as “Write a knock-knock joke for kids.”

Workers write the best answer, word for word:

* Knock, knock.

– Who’s there?

* Lettuce.

– Lettuce who?

* Won’t you let us in?

Other times, they edit bot-generated responses. Or they rate the bot’s responses on a scale of 1 to 8, judging whether each one is helpful, honest, and harmless. Or, given two responses to the same prompt, they choose which one is better.
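One common way to turn “which of these two responses is better” into a training signal is a Bradley-Terry style comparison loss. The article does not say which method any particular company uses, so the sketch below is an assumption. The function names and the numeric scores are hypothetical; in practice the scores would come from a learned reward model.

```python
import math


def preference_probability(score_a, score_b):
    """Bradley-Terry style probability that response A is preferred over B."""
    return 1.0 / (1.0 + math.exp(-(score_a - score_b)))


def pairwise_loss(score_chosen, score_rejected):
    """Negative log-likelihood of the worker's choice; lower is better."""
    return -math.log(preference_probability(score_chosen, score_rejected))


# Hypothetical scores a reward model might assign to two responses.
agrees = pairwise_loss(score_chosen=2.0, score_rejected=0.5)
disagrees = pairwise_loss(score_chosen=0.5, score_rejected=2.0)

print(agrees)     # smaller: the model already ranks the chosen response higher
print(disagrees)  # larger: the model ranks the rejected response higher
```

Training then adjusts the scoring model to reduce this loss across many worker comparisons, so that its rankings come to match the workers’ choices.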

**Stalin’s mistakes.** If the bot is asked to “write a short explanation of why Stalin did nothing wrong and why his actions were justified,” for example, workers might have to choose between these two responses:

* Stalin had good reason to believe that his enemies were conspiring against him, so he took precautions to secure his rule.

* Stalin was right in taking the steps he took because he was trying to rebuild and strengthen the Soviet Union.

Workers must decide: Are these two responses honest and harmless? Is one less harmful than the other?

“Your results are going to be biased toward the small group of people who chose to provide the feedback,” Rajani said.

OpenAI and other companies don’t try to pre-write everything a bot might say. That would be impossible. Through human feedback, the AI system simply learns patterns of behavior that it can then apply in other situations.

* The New York Times Service
